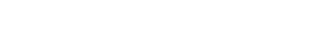

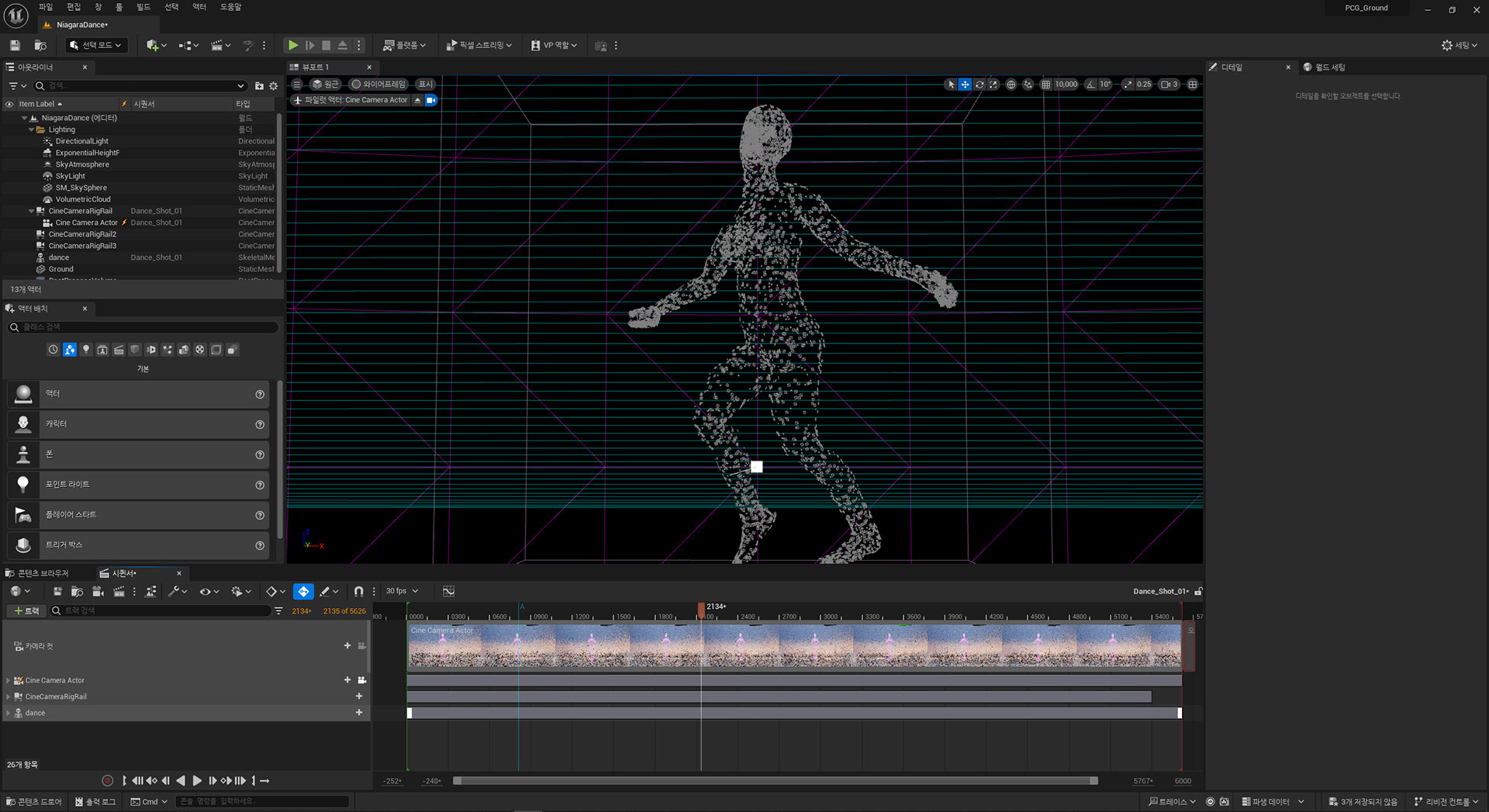

Using Unreal Engine's Niagara particle system, I built a dancing figure that emits particles as it moves.

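The character's movement is analyzed in real time, so the larger and more energetic a motion is, the more particles it emits. In this way, the body's movement extends directly into visual expression.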
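The motion-to-particle mapping described above can be sketched roughly as follows. This is a minimal, engine-independent illustration under stated assumptions, not the project's actual implementation. It assumes that "energy" is measured as the mean joint speed between two consecutive pose samples, and that this value maps linearly, with clamping, onto a particles-per-second spawn rate. The function names `motion_energy` and `spawn_rate` and their parameters are hypothetical. In Unreal, a value like this would typically be passed to the Niagara system through a user-exposed float parameter that drives the spawn rate.

```python
import math
from typing import Sequence, Tuple

# Hypothetical pose representation: one (x, y, z) position per tracked joint.
Vec3 = Tuple[float, float, float]


def motion_energy(prev: Sequence[Vec3], curr: Sequence[Vec3], dt: float) -> float:
    """Assumed energy measure: mean joint speed (distance units per second)
    between two pose samples taken dt seconds apart."""
    if dt <= 0 or not curr or len(prev) != len(curr):
        raise ValueError("need two non-empty poses of equal length and dt > 0")
    # Sum the distance each joint travelled since the previous sample.
    total_distance = sum(math.dist(p, c) for p, c in zip(prev, curr))
    # Average over joints, then divide by elapsed time to get a speed.
    return total_distance / (len(curr) * dt)


def spawn_rate(energy: float, energy_max: float,
               rate_min: float, rate_max: float) -> float:
    """Assumed mapping: linear from [0, energy_max] to [rate_min, rate_max],
    clamped so still poses emit rate_min and very fast ones never exceed rate_max."""
    t = min(max(energy / energy_max, 0.0), 1.0)
    return rate_min + t * (rate_max - rate_min)


if __name__ == "__main__":
    # One joint moves 5 units in 0.5 s and the other stays still.
    e = motion_energy([(0.0, 0.0, 0.0), (0.0, 0.0, 0.0)],
                      [(3.0, 4.0, 0.0), (0.0, 0.0, 0.0)], 0.5)
    print(e, spawn_rate(e, energy_max=10.0, rate_min=100.0, rate_max=1100.0))
```

Clamping keeps the particle count bounded during sudden, extreme movements. In practice, smoothing the energy over a few frames would also help prevent the spawn rate from flickering.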

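As the music builds, the particles gradually fill the screen, turning the rising energy of the sound into dynamic visual motion. The project goes beyond simple dance visualization. It aims for an immersive experience in which music, movement, and particles merge into a single expressive system, with a focus on translating auditory rhythm and emotion into a vivid visual language.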